Quick start with AWS Bedrock

We recommend using Anthropic Claude 4.5 Sonnet on Bedrock for strong reasoning and tool use.

Prerequisites

- An AWS account with Bedrock enabled in your region

- Access to the models you plan to use (e.g., Anthropic Claude) granted in the Bedrock console

- An AWS Bedrock API key

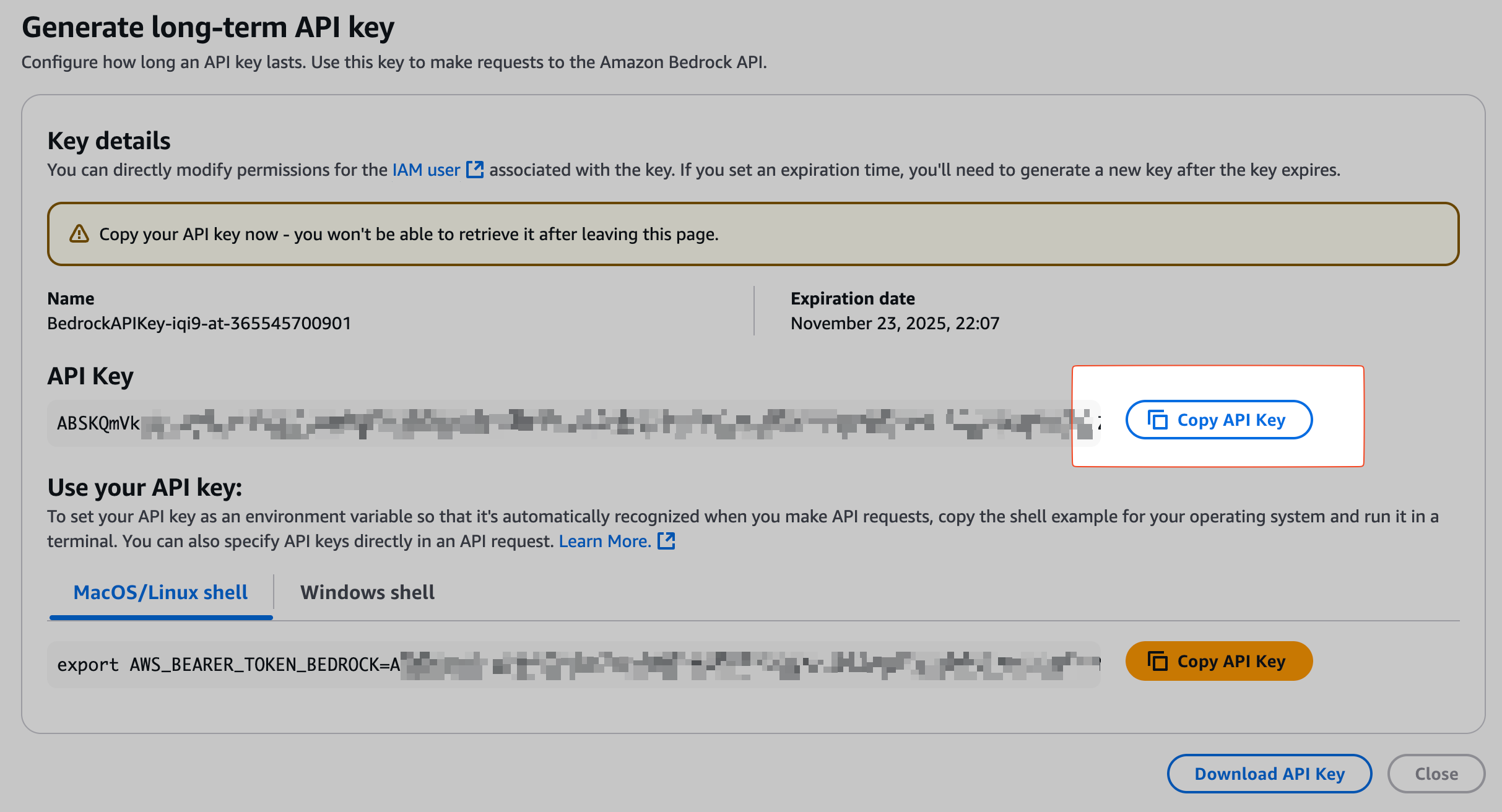

You can copy the API key from the console. We recommend using a long-term API key with a longer expiration date so that you don't have to rotate the key as often.


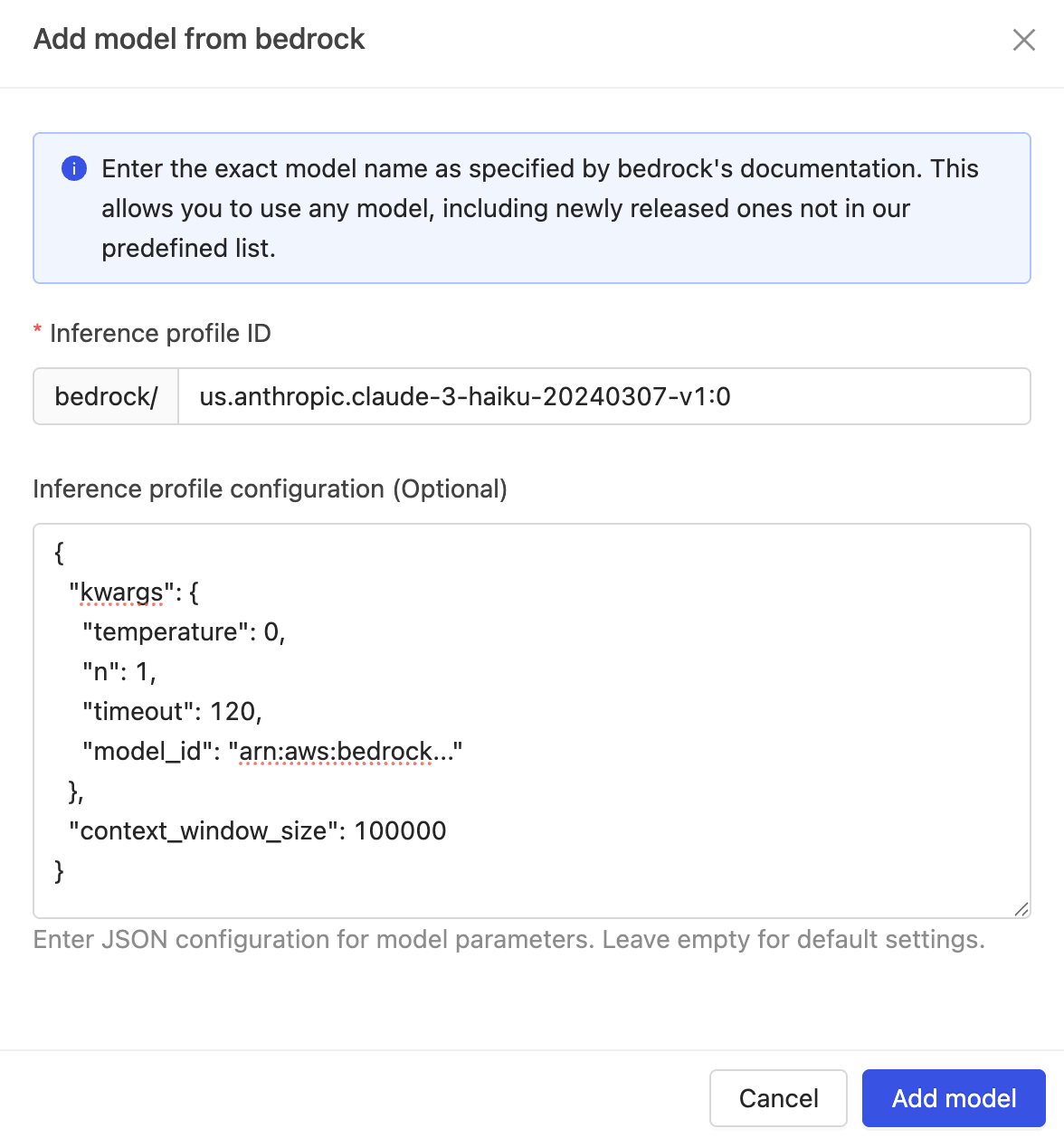

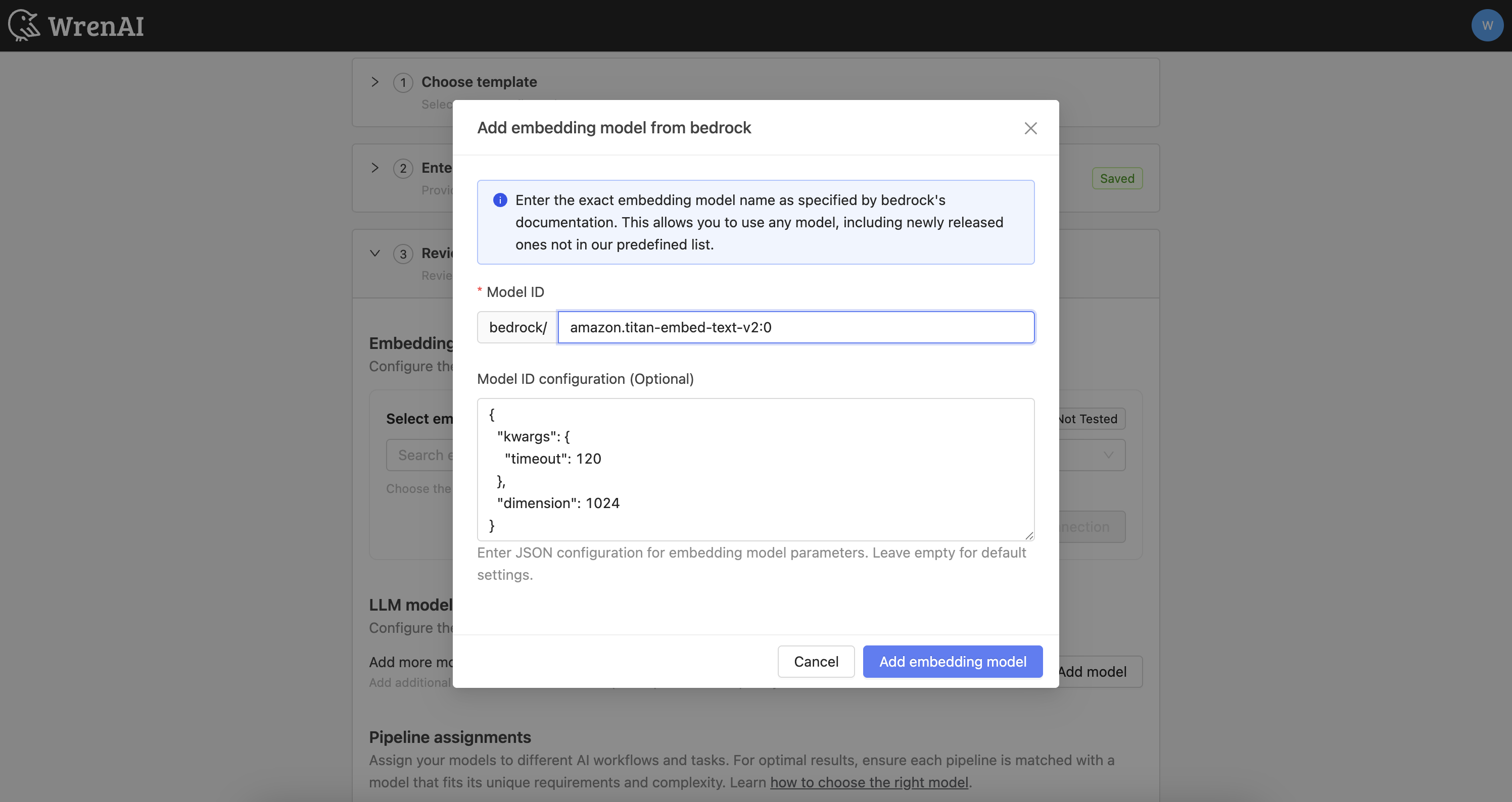

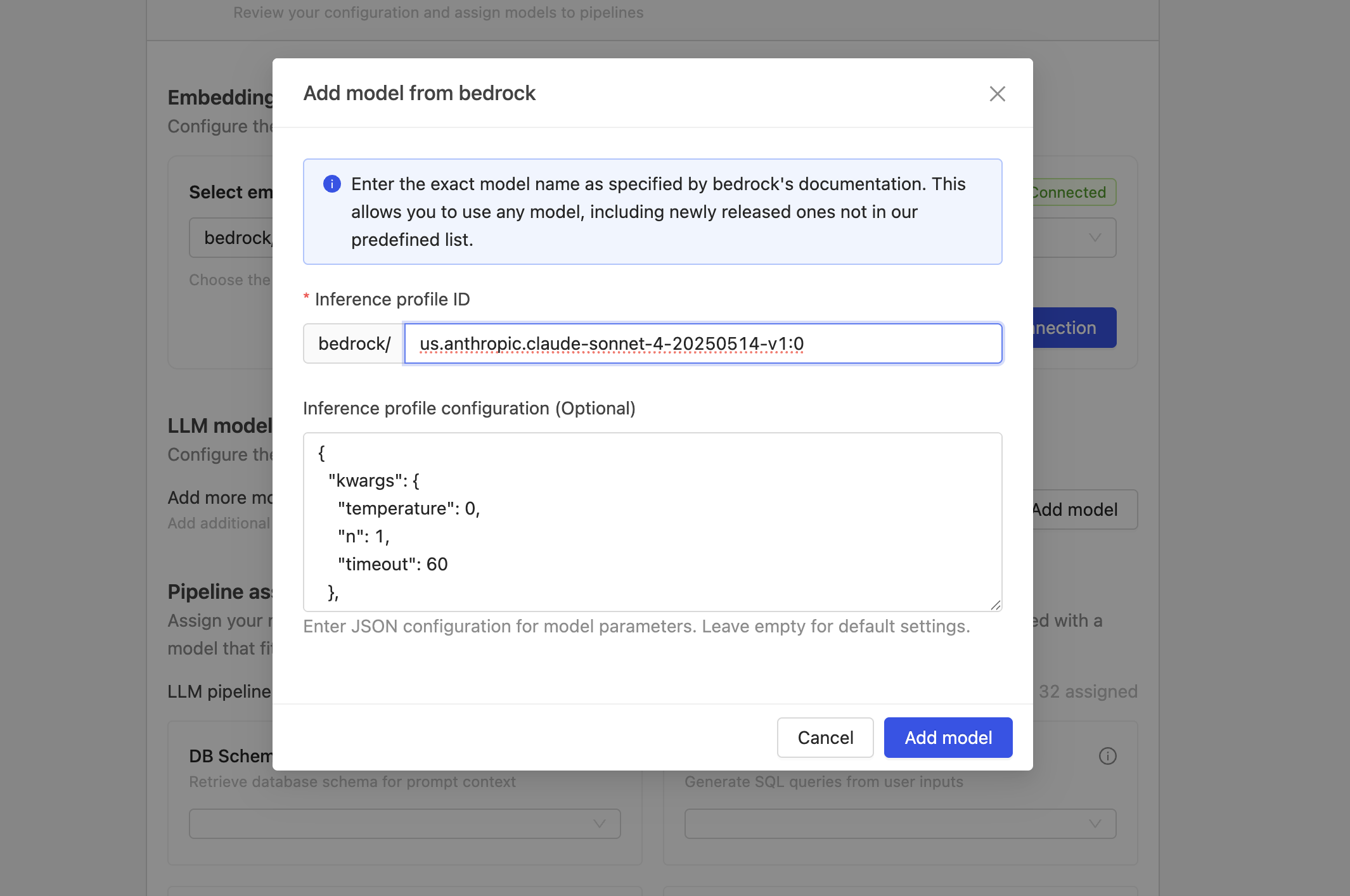

If you plan to use an Amazon Bedrock inference profile to track usage and cost when invoking a model, add "model_id" (the inference profile ARN) to the configuration when you set up the embedding model or LLM model in Wren AI. For the model name in the configuration, you can simply use the inference profile ID, as long as it refers to the same inference profile as the ARN.
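To double-check that the model name and the ARN point at the same profile, you can split the ARN into its parts. The Python sketch below is hypothetical (parse_profile_arn and id_matches_arn are not part of Wren AI or the AWS SDK). It assumes the standard ARN layout arn:partition:service:region:account-id:resource, where the resource is typically inference-profile/<ID> for cross-region profiles and application-inference-profile/<ID> for profiles you create. The example ARN in the usage note is made up.

```python
# Hypothetical helper: split a Bedrock inference profile ARN into its parts
# so you can confirm the model name (profile ID) matches the ARN's profile.
from typing import NamedTuple


class ProfileArn(NamedTuple):
    region: str
    account_id: str
    resource_type: str  # e.g. "inference-profile" or "application-inference-profile"
    profile_id: str


def parse_profile_arn(arn: str) -> ProfileArn:
    # ARN layout: arn:partition:service:region:account-id:resource
    # maxsplit=5 keeps colons inside the resource part (e.g. "...-v1:0") intact.
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "bedrock":
        raise ValueError(f"not a Bedrock ARN: {arn!r}")
    resource_type, sep, profile_id = parts[5].partition("/")
    if not sep or not profile_id:
        raise ValueError(f"ARN has no resource ID: {arn!r}")
    return ProfileArn(parts[3], parts[4], resource_type, profile_id)


def id_matches_arn(profile_id: str, arn: str) -> bool:
    """True if the profile ID used as the model name is the one in the ARN."""
    return parse_profile_arn(arn).profile_id == profile_id
```

For example, parse_profile_arn("arn:aws:bedrock:us-east-1:123456789012:inference-profile/us.example-model-v1:0") yields region "us-east-1" and profile ID "us.example-model-v1:0".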

Example of a complete configuration for an embedding model:

```json
{
  "kwargs": {
    "timeout": 600,
    "model_id": "arn:aws:bedrock:xxxxxx"
  },
  "dimension": 4096
}
```

Example of a complete configuration for an LLM model:

```json
{
  "kwargs": {
    "n": 1,
    "seed": 0,
    "timeout": 600,
    "max_tokens": 4096,
    "temperature": 0,
    "model_id": "arn:aws:bedrock:xxxxxx"
  },
  "context_window_size": 240000
}
```
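If you configure several models, generating these snippets can be less error-prone than editing them by hand. A minimal sketch, assuming you only need to swap in a different inference profile ARN: the hypothetical helpers embedding_config and llm_config reproduce the two example configurations above field for field, with the same default values, and print JSON you can paste into Wren AI. The ARN is left as the same elided placeholder used in the examples.

```python
import json


def embedding_config(profile_arn: str, dimension: int = 4096, timeout: int = 600) -> dict:
    # Mirrors the embedding example: "model_id" carries the inference profile ARN.
    return {"kwargs": {"timeout": timeout, "model_id": profile_arn}, "dimension": dimension}


def llm_config(profile_arn: str, max_tokens: int = 4096, context_window_size: int = 240000) -> dict:
    # Mirrors the LLM example; the remaining kwargs keep the example's values.
    return {
        "kwargs": {
            "n": 1,
            "seed": 0,
            "timeout": 600,
            "max_tokens": max_tokens,
            "temperature": 0,
            "model_id": profile_arn,
        },
        "context_window_size": context_window_size,
    }


if __name__ == "__main__":
    arn = "arn:aws:bedrock:xxxxxx"  # placeholder; use your own inference profile ARN
    print(json.dumps(embedding_config(arn), indent=2))
    print(json.dumps(llm_config(arn), indent=2))
```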

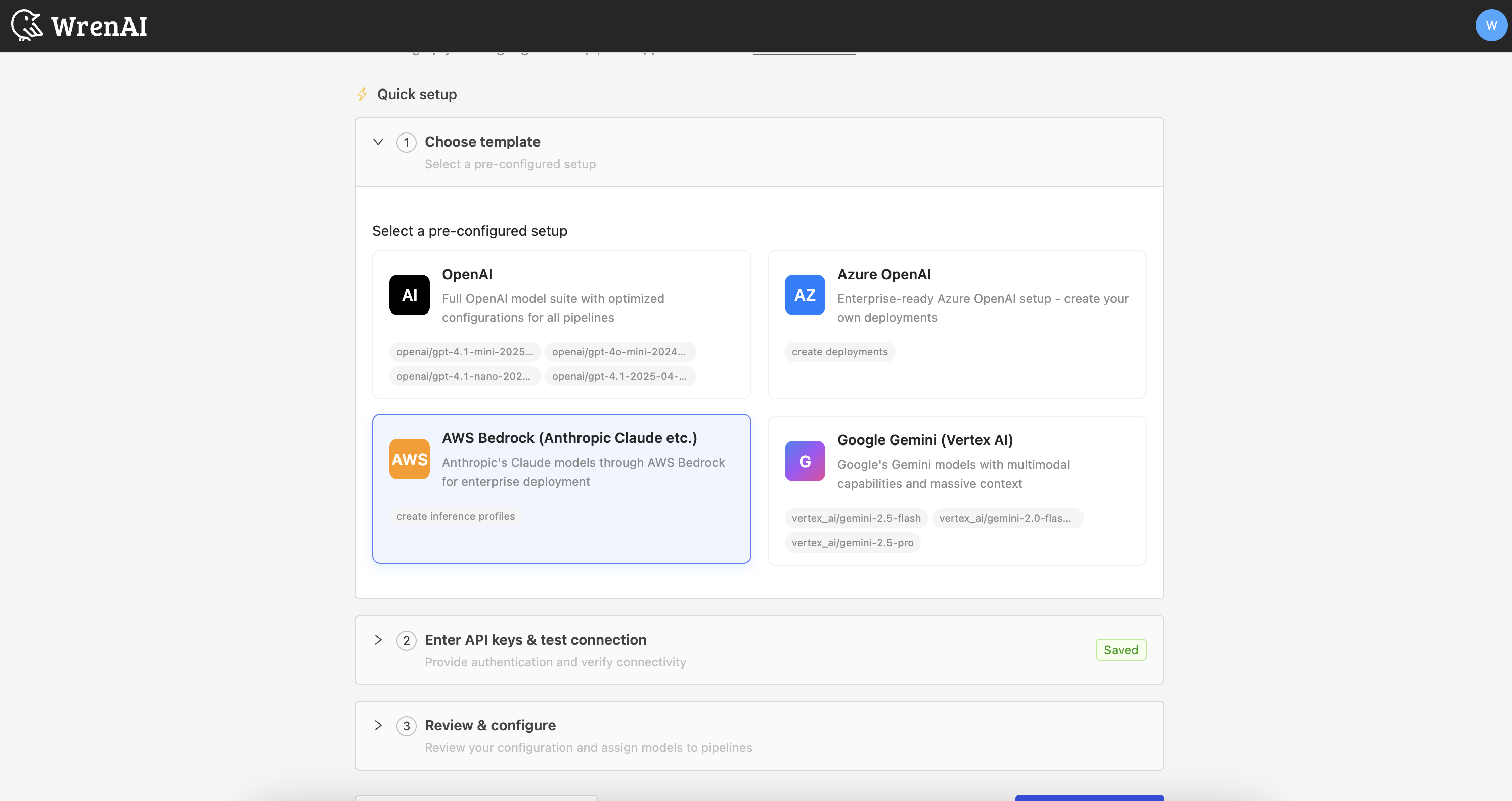

Steps (Quick setup)

1. During onboarding, select "Quick setup" and then "AWS Bedrock".

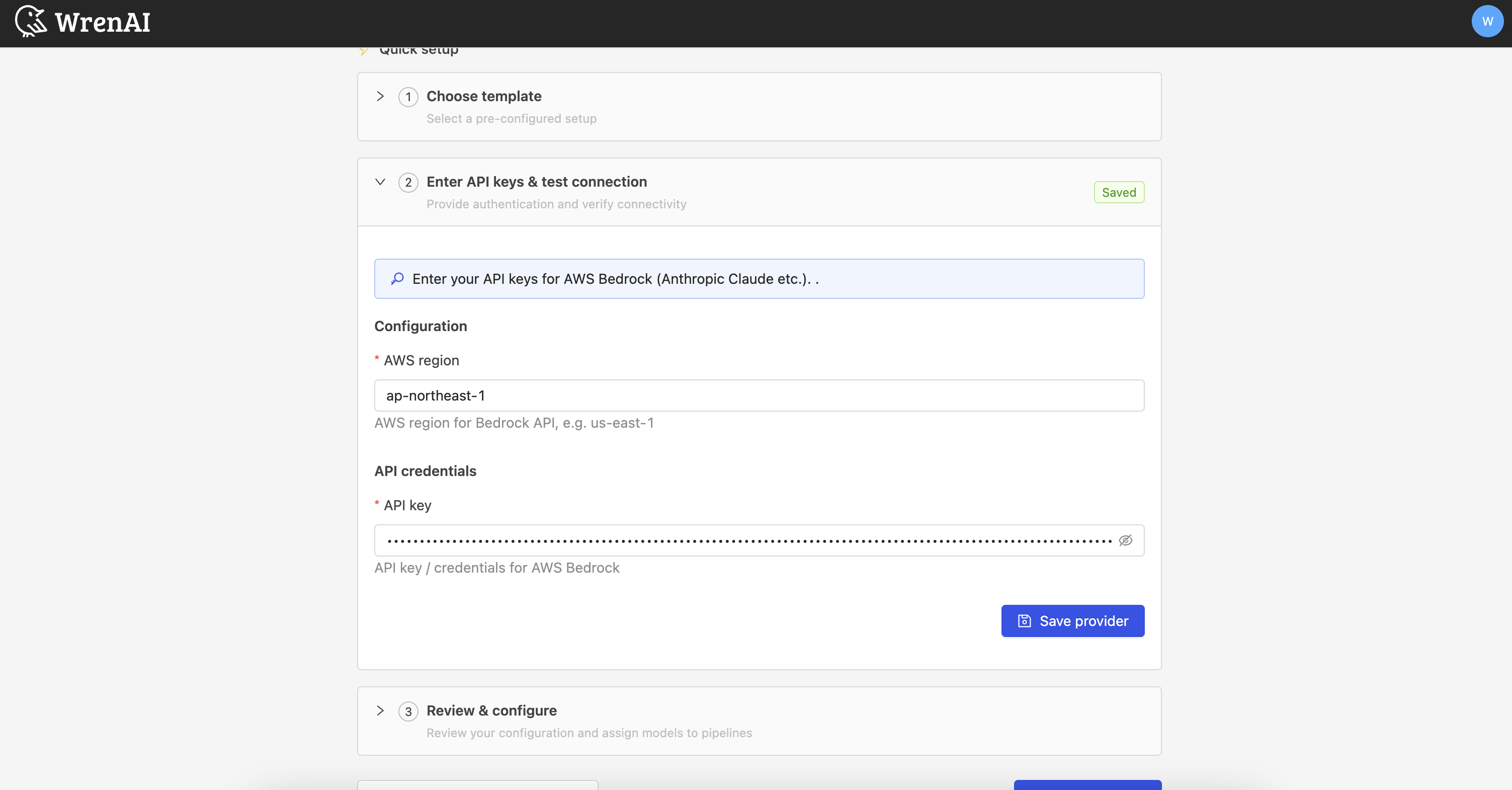

2. Enter your region and AWS Bedrock API key.
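To check the region and API key outside Wren AI, you can use them with the AWS SDK for Python (boto3). This sketch assumes a boto3 version recent enough to support Amazon Bedrock API keys, which the SDK reads from the AWS_BEARER_TOKEN_BEDROCK environment variable. The helper names are hypothetical, and runtime_endpoint only shows which regional endpoint is used in the standard "aws" partition.

```python
import os


def runtime_endpoint(region: str) -> str:
    # Regional Bedrock runtime endpoint in the standard "aws" partition.
    return f"https://bedrock-runtime.{region}.amazonaws.com"


def use_bedrock_api_key(api_key: str) -> None:
    # boto3 picks up a Bedrock API key from this environment variable.
    os.environ["AWS_BEARER_TOKEN_BEDROCK"] = api_key


def make_runtime_client(region: str):
    # Lazy import so the helpers above work even where boto3 is not installed.
    import boto3

    return boto3.client("bedrock-runtime", region_name=region)
```

For example, call use_bedrock_api_key with your key, then make_runtime_client("us-east-1"); the client's meta.endpoint_url should match runtime_endpoint("us-east-1").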

3. Select an embedding model.

Now, let's go to the AWS Bedrock console to find an embedding model.

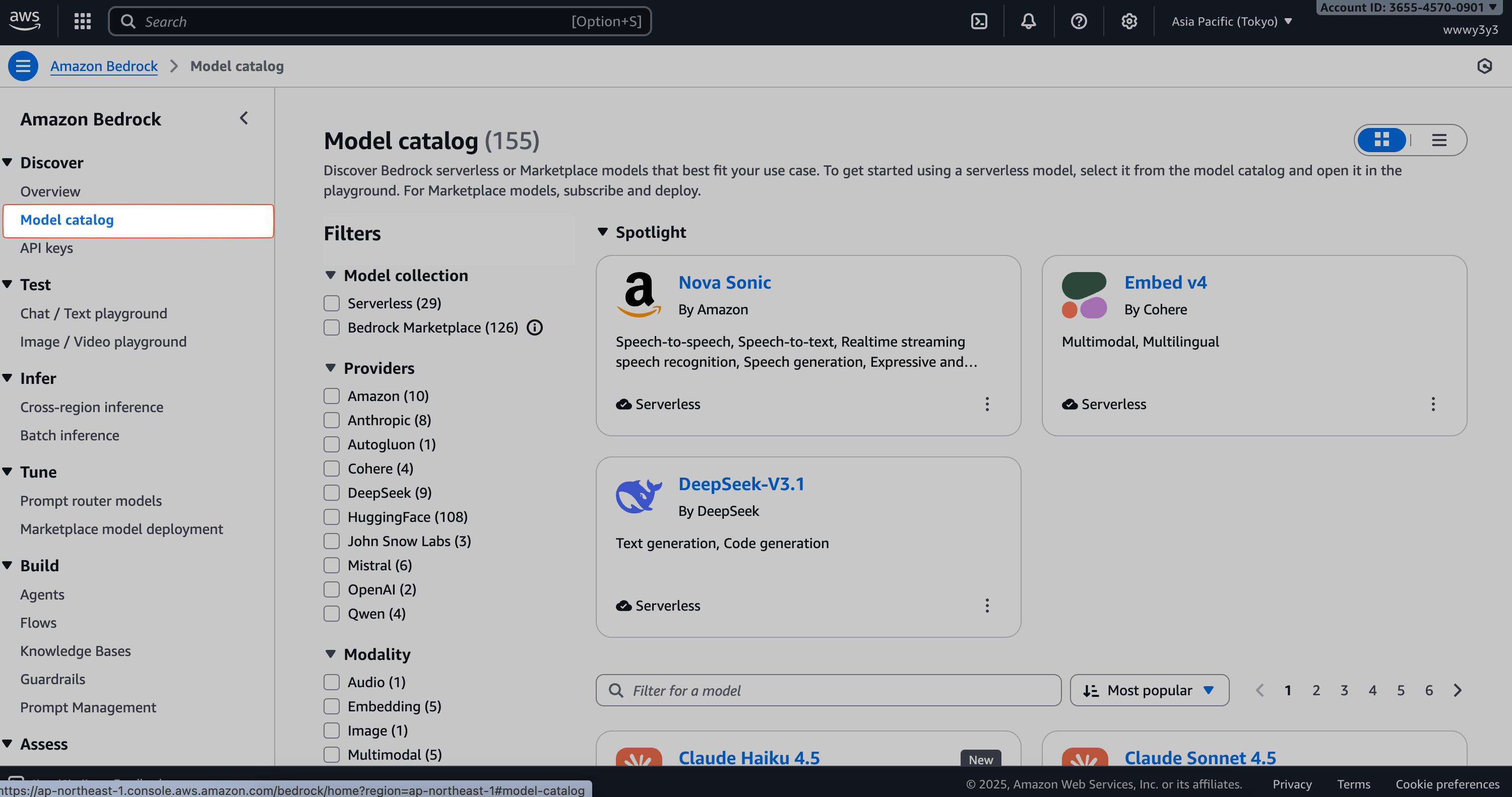

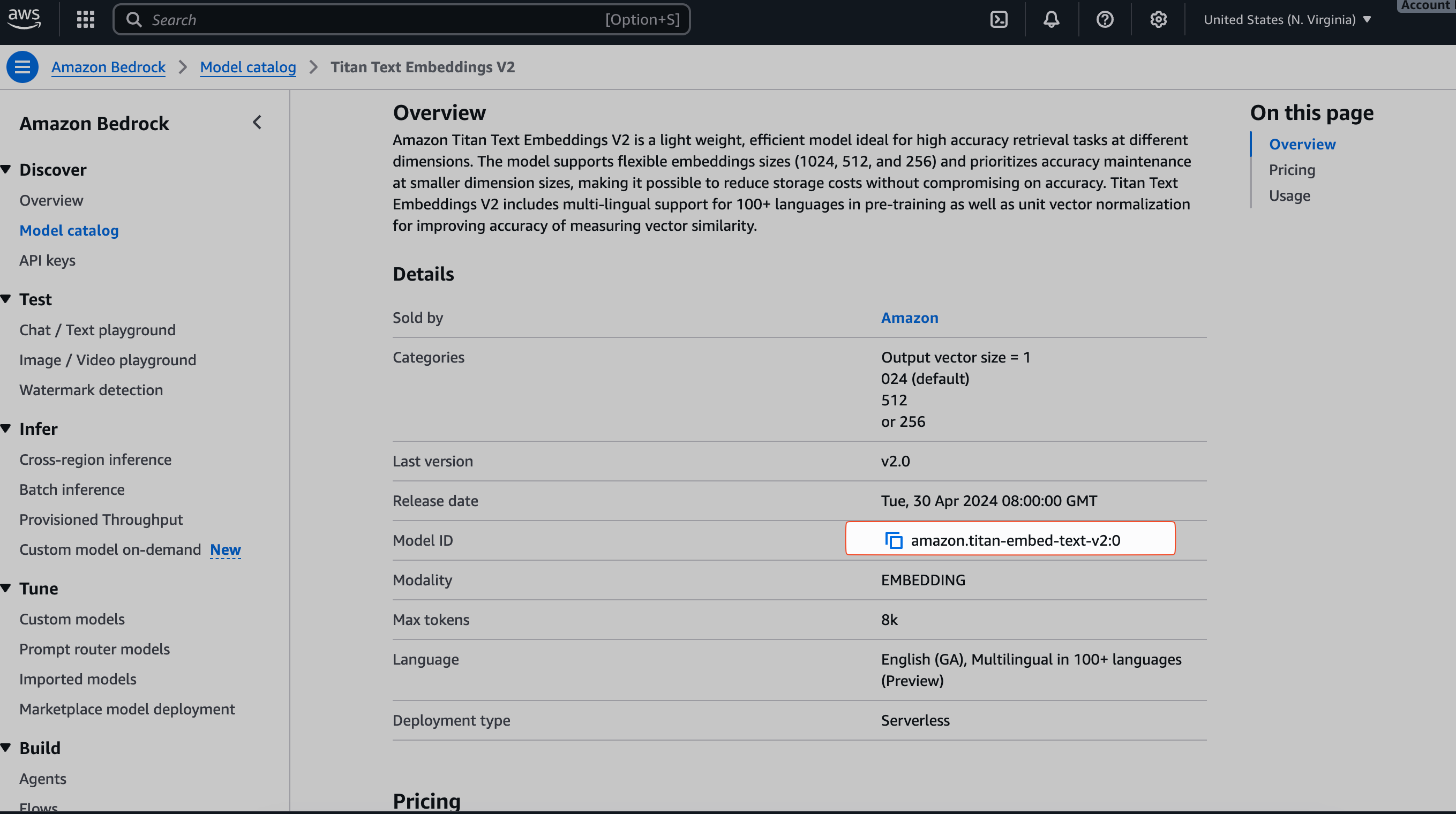

Visit the AWS Bedrock console -> "Model Catalog".

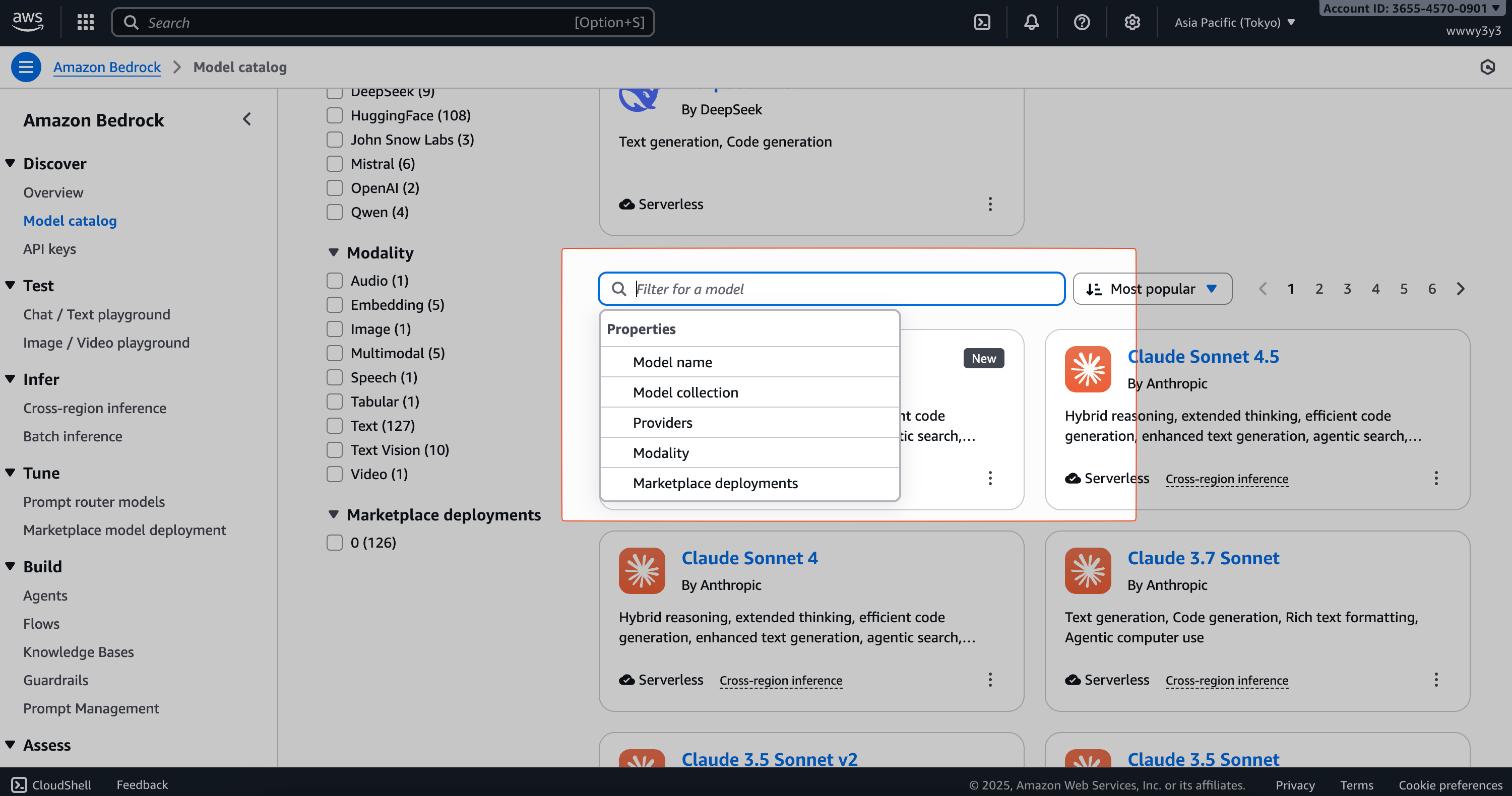

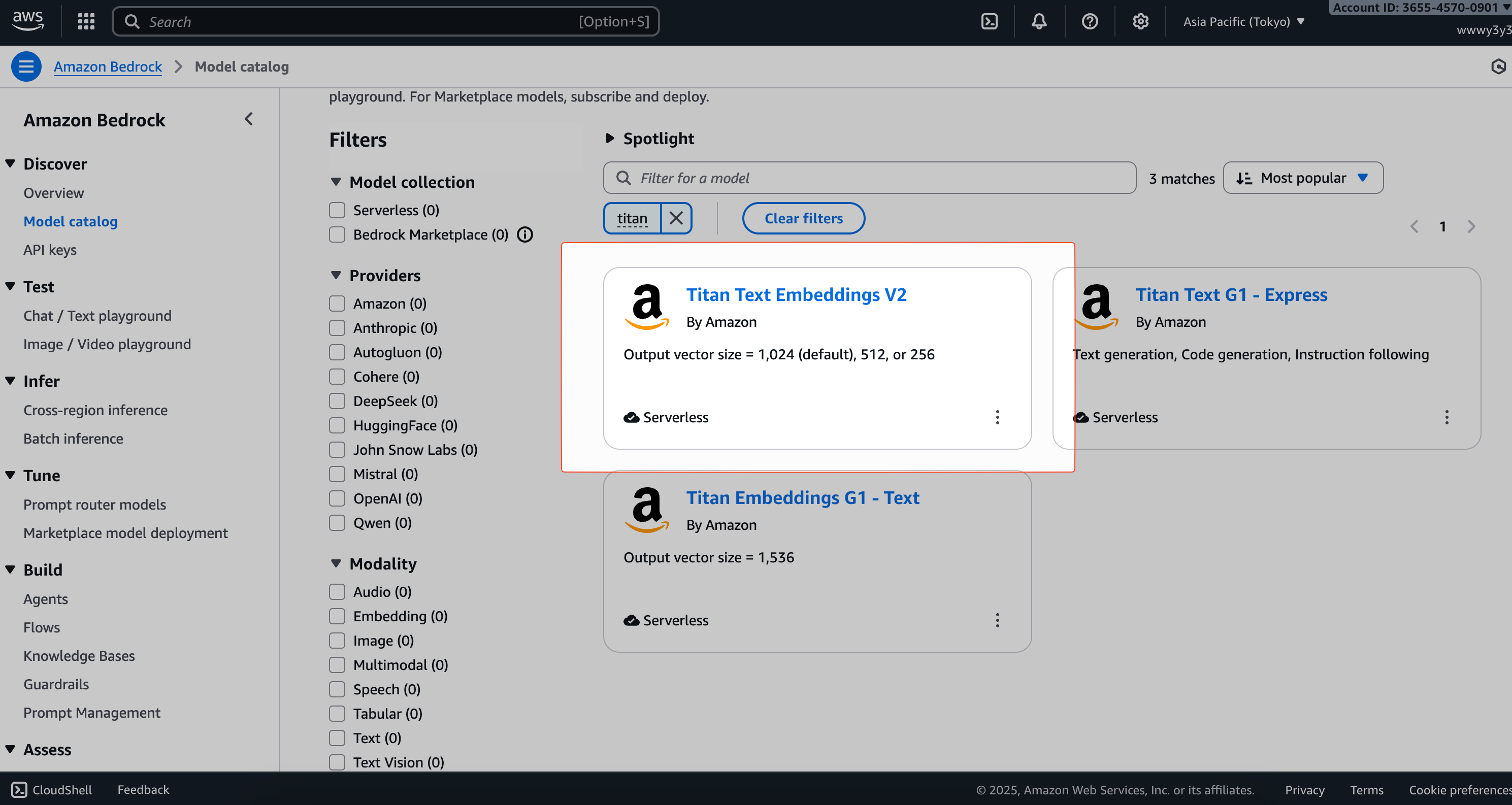

Then, search for "titan" in the search bar.

Open the "Titan Text Embeddings V2" model and copy its model ID.

Go back to Wren AI and enter the model ID.
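You can also look up embedding model IDs programmatically. This sketch assumes boto3 and AWS credentials permitted to call bedrock:ListFoundationModels (for example, a configured AWS profile). list_foundation_models with byOutputModality="EMBEDDING" is a boto3 call; embedding_model_ids and fetch_embedding_models are hypothetical helpers, with the filter roughly mimicking the console's search bar.

```python
from __future__ import annotations


def embedding_model_ids(summaries: list[dict], query: str = "titan") -> list[str]:
    # Keep models whose ID or display name contains the query (case-insensitive).
    q = query.lower()
    return [
        s["modelId"]
        for s in summaries
        if q in s["modelId"].lower() or q in s.get("modelName", "").lower()
    ]


def fetch_embedding_models(region: str) -> list[dict]:
    # Lazy import so the filter above works without boto3 installed.
    import boto3

    bedrock = boto3.client("bedrock", region_name=region)
    return bedrock.list_foundation_models(byOutputModality="EMBEDDING")["modelSummaries"]
```

For example, embedding_model_ids(fetch_embedding_models("us-east-1")) lists the Titan embedding model IDs available in that region.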

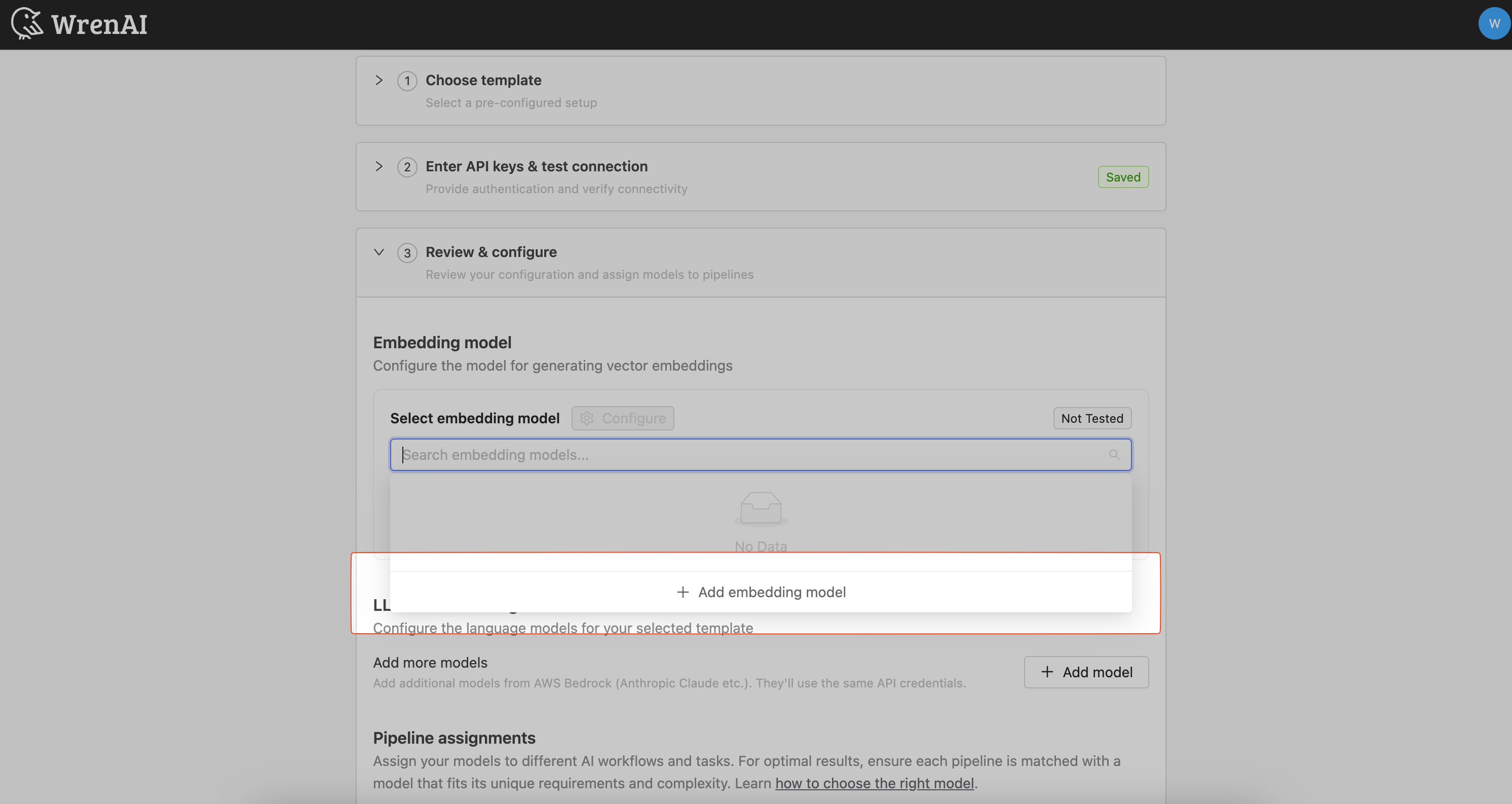

In the "Embedding model" section, click "Add embedding model" in the input.

In the form, fill in the model ID you copied from the AWS Bedrock console.

The configuration is pre-filled for you. Click "Add embedding model".

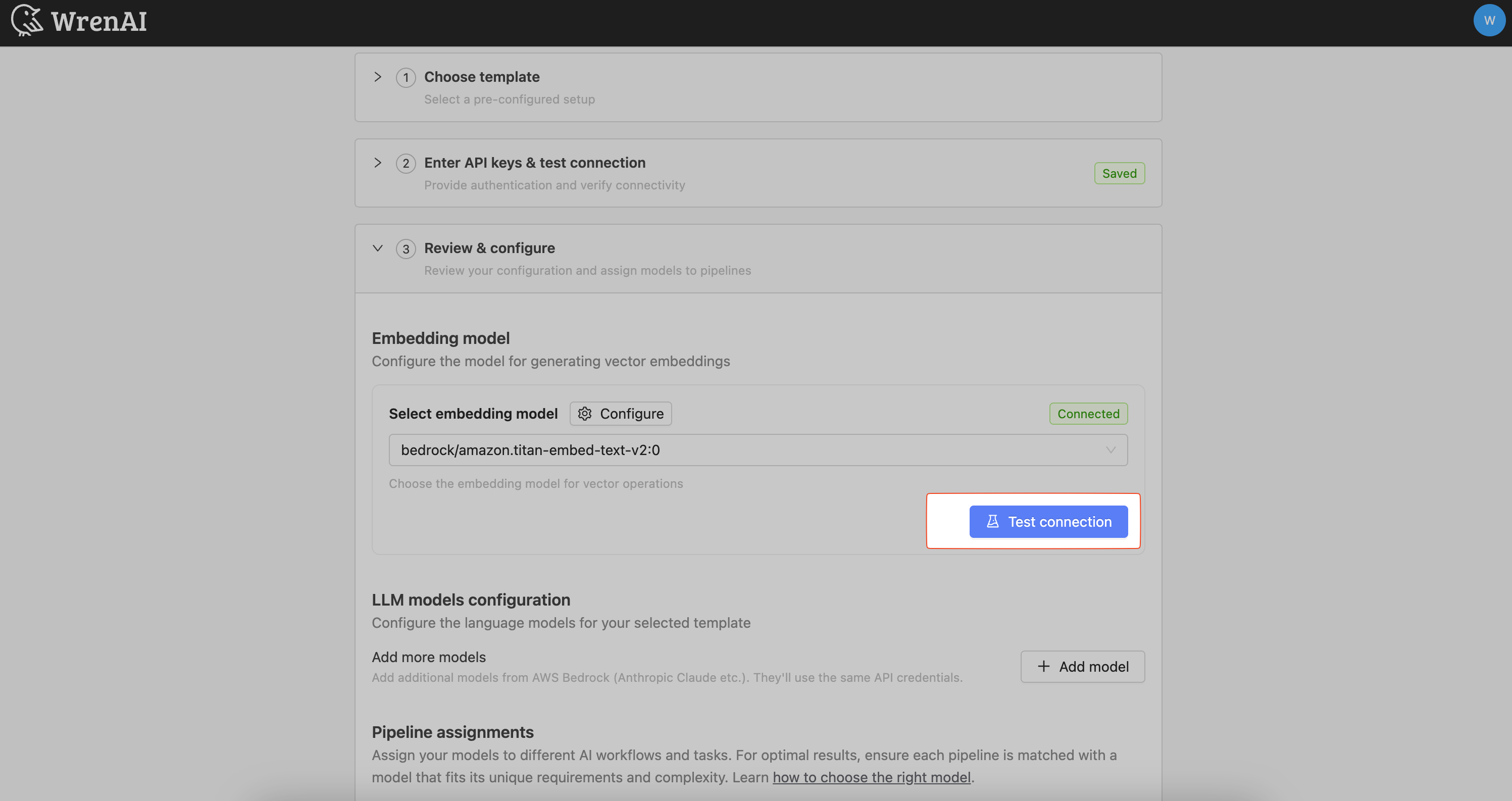

After that, click "Test connection" to verify the embedding model is working.
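If "Test connection" fails, calling the model directly can help show whether the problem lies with the key, the region, or model access. A minimal sketch, assuming boto3 with the API key already set in AWS_BEARER_TOKEN_BEDROCK: it sends a Titan Text Embeddings V2 request (fields inputText, dimensions, normalize) through invoke_model. The helper names are hypothetical, and 1024 is the model's default output size.

```python
import json

TITAN_V2 = "amazon.titan-embed-text-v2:0"


def titan_request_body(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    # Request body for Titan Text Embeddings V2.
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def embed(text: str, region: str, model_id: str = TITAN_V2) -> list:
    # Lazy import so titan_request_body works without boto3 installed.
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=model_id,
        body=titan_request_body(text),
        contentType="application/json",
        accept="application/json",
    )
    # The body is a stream; read it and decode the JSON payload.
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

For example, len(embed("hello", "us-east-1")) gives the length of the vector the model returns, which is a quick way to see what the "dimension" field should hold.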

4. Select an LLM model.

Now, let's add an LLM model to Wren AI. The steps below use Claude 4.5 Sonnet; you can repeat them to add other models, such as Claude 3.5 Haiku. For each model, go to the AWS Bedrock console and copy its "Inference Profile ID".

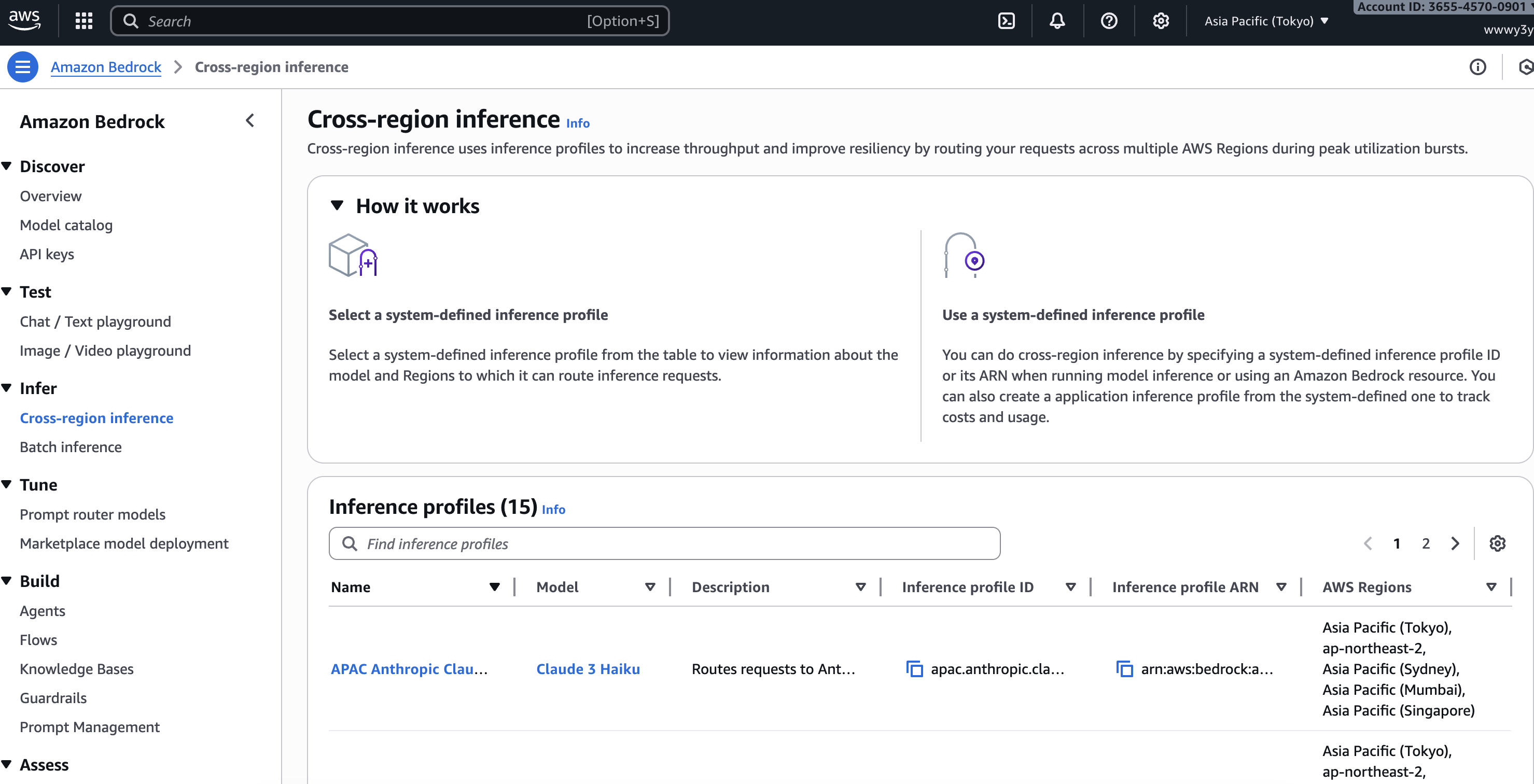

Visit the AWS Bedrock console -> "Cross-region inference".

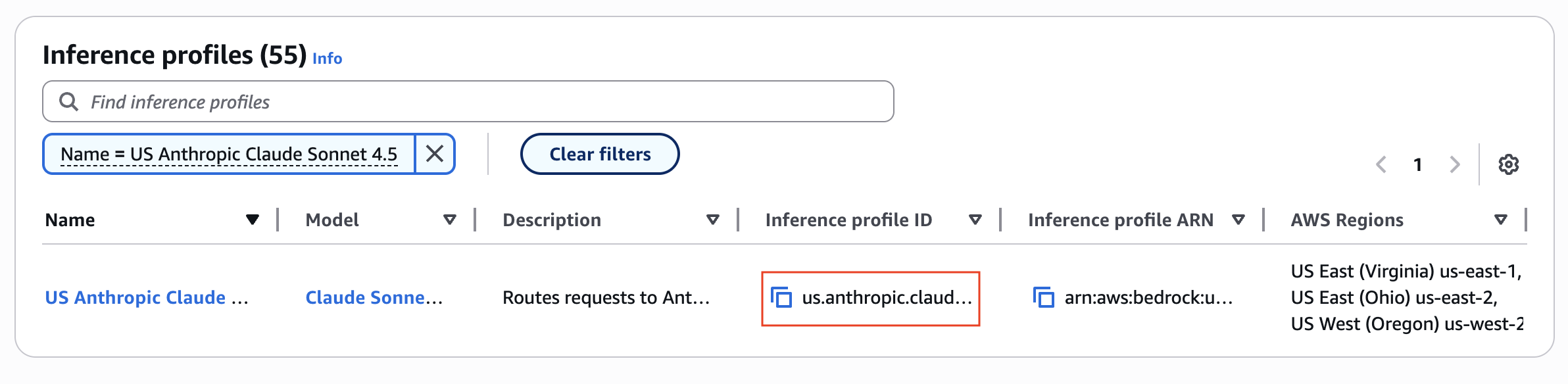

Search for "sonnet 4.5" in the search bar and find the "Claude 4.5 Sonnet" model. Copy the "Inference Profile ID".
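The same IDs can be listed with boto3. This sketch assumes AWS credentials permitted to call bedrock:ListInferenceProfiles; it pages through list_inference_profiles(typeEquals="SYSTEM_DEFINED") and keeps profiles whose name or ID contains every word of the query, roughly like the console's search bar. match_profiles and fetch_system_profiles are hypothetical helpers, and the sample profiles in the test are made up.

```python
from __future__ import annotations


def match_profiles(summaries: list[dict], query: str) -> list[tuple[str, str]]:
    # Return (name, ID) pairs whose name or ID contains every word of the query.
    words = query.lower().split()
    hits = []
    for s in summaries:
        haystack = f'{s["inferenceProfileName"]} {s["inferenceProfileId"]}'.lower()
        if all(w in haystack for w in words):
            hits.append((s["inferenceProfileName"], s["inferenceProfileId"]))
    return hits


def fetch_system_profiles(region: str) -> list[dict]:
    # Lazy import so match_profiles works without boto3 installed.
    import boto3

    bedrock = boto3.client("bedrock", region_name=region)
    summaries, token = [], None
    while True:
        kwargs = {"typeEquals": "SYSTEM_DEFINED"}
        if token:
            kwargs["nextToken"] = token
        page = bedrock.list_inference_profiles(**kwargs)
        summaries.extend(page["inferenceProfileSummaries"])
        token = page.get("nextToken")
        if not token:  # no more pages
            return summaries
```

For example, match_profiles(fetch_system_profiles("us-east-1"), "sonnet 4.5") returns the matching profile names and IDs.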

Go back to Wren AI, click "Add model", and paste the "Inference Profile ID".
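To confirm the profile ID works before assigning it to pipelines, you can send a one-off prompt through the Bedrock Converse API, which accepts an inference profile ID as modelId. A minimal sketch, assuming boto3 and the API key in AWS_BEARER_TOKEN_BEDROCK; the helper names are hypothetical, and maxTokens and temperature mirror the LLM configuration example.

```python
from __future__ import annotations


def converse_request(profile_id: str, prompt: str, max_tokens: int = 4096,
                     temperature: float = 0.0) -> dict:
    # Keyword arguments for bedrock-runtime's converse() call.
    return {
        "modelId": profile_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def reply_text(response: dict) -> str:
    # Concatenate the text blocks of the assistant's reply.
    blocks = response["output"]["message"]["content"]
    return "".join(b.get("text", "") for b in blocks)


def ask(profile_id: str, prompt: str, region: str) -> str:
    # Lazy import so the pure helpers above work without boto3 installed.
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    return reply_text(client.converse(**converse_request(profile_id, prompt)))
```

For example, ask("<your inference profile ID>", "Reply with OK.", "us-east-1") should return a short reply if the profile is reachable.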

5. Assign the models to pipelines.

Assign Claude 4.5 Sonnet to all pipelines, then click "Complete setup" to finish.