Langfuse Setup

Langfuse is an LLM engineering platform that helps you monitor, debug, and optimize your AI pipelines. It is available in both self-hosted and cloud versions. With Langfuse, you can see what is happening under the hood of each pipeline run.

Wren AI supports Langfuse for tracing and monitoring AI pipelines. After you have installed Wren AI, you can start using Langfuse by following the steps below.

In this guide, we will use the cloud version of Langfuse. If you want to use the self-hosted version, please refer to the Langfuse documentation.

To connect Wren AI to your own Langfuse instance, make sure the following are in place:

- You have a Langfuse instance running.

- You have the correct `langfuse_host` in `~/.wrenai/config.yaml`.

- Wren AI (which runs in a Docker container) can access the Langfuse instance.
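The last prerequisite can be checked with a quick TCP probe run from inside the Wren AI container. The following is a minimal sketch and not part of Wren AI: the helper names `host_and_port` and `can_connect` are hypothetical, and the probe only confirms that a TCP connection opens, not that Langfuse itself is healthy. Keep in mind that inside a container, `localhost` refers to the container itself, so a self-hosted Langfuse instance running on the Docker host usually needs a different address.

```python
import socket
from urllib.parse import urlparse


def host_and_port(url: str) -> tuple[str, int]:
    """Split a URL such as the langfuse_host value into (hostname, port)."""
    parsed = urlparse(url)
    if parsed.hostname is None:
        raise ValueError(f"not a full URL: {url!r}")
    # Fall back to the scheme's default port when none is given.
    default_port = 443 if parsed.scheme == "https" else 80
    return parsed.hostname, parsed.port or default_port


def can_connect(url: str, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to the URL's host and port opens."""
    host, port = host_and_port(url)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

For example, `can_connect("https://cloud.langfuse.com")` probes the default EU cloud host mentioned in this guide.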

How to Set Up Langfuse

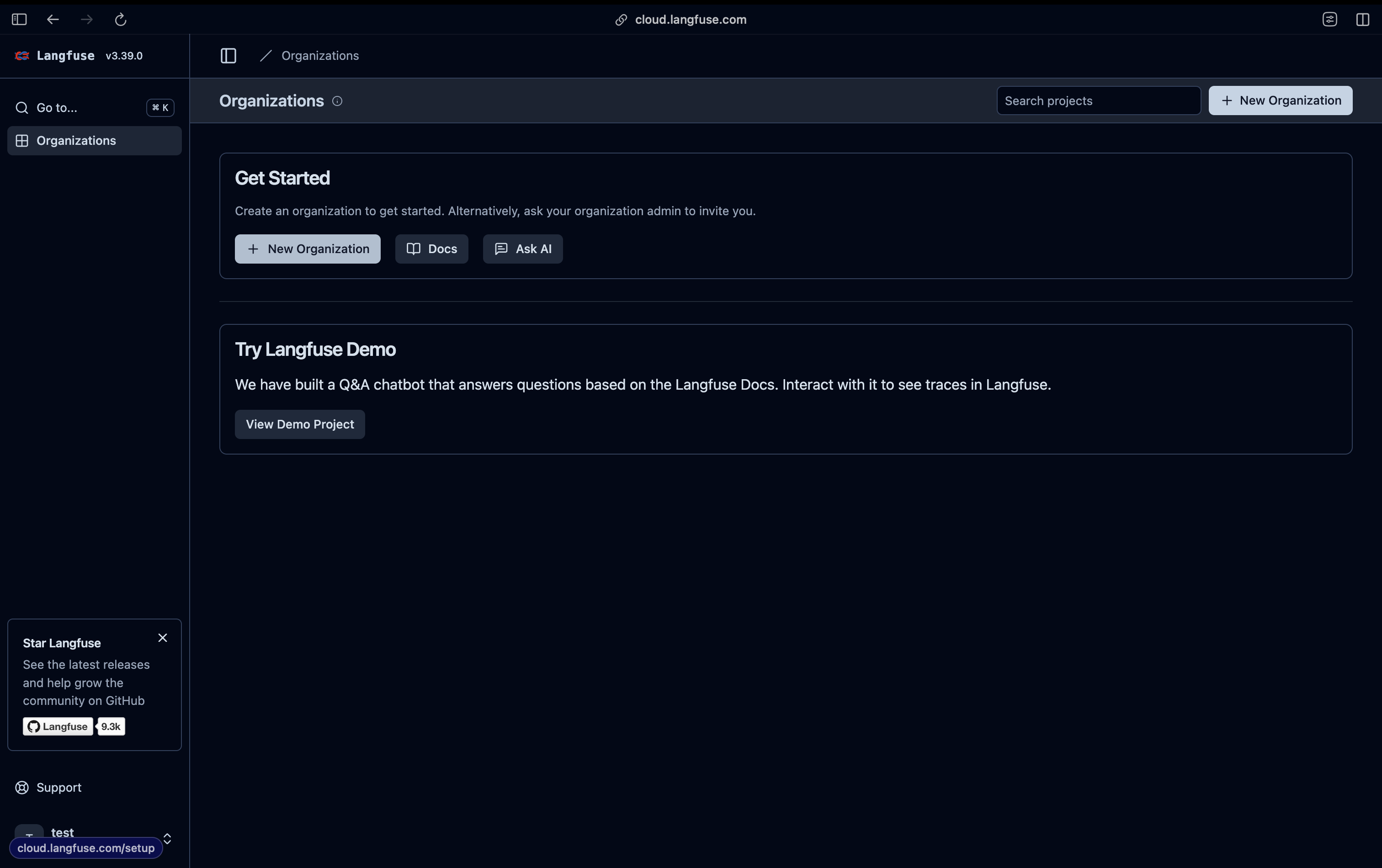

- Go to Langfuse and click the "Sign Up" button to register an account.

note

Make sure your data region is set to EU, which means the `langfuse_host` is `https://cloud.langfuse.com`, the same as the default value of `langfuse_host` in Wren AI. Otherwise, you need to change the value of `langfuse_host` in `~/.wrenai/config.yaml` to `https://us.cloud.langfuse.com` later.

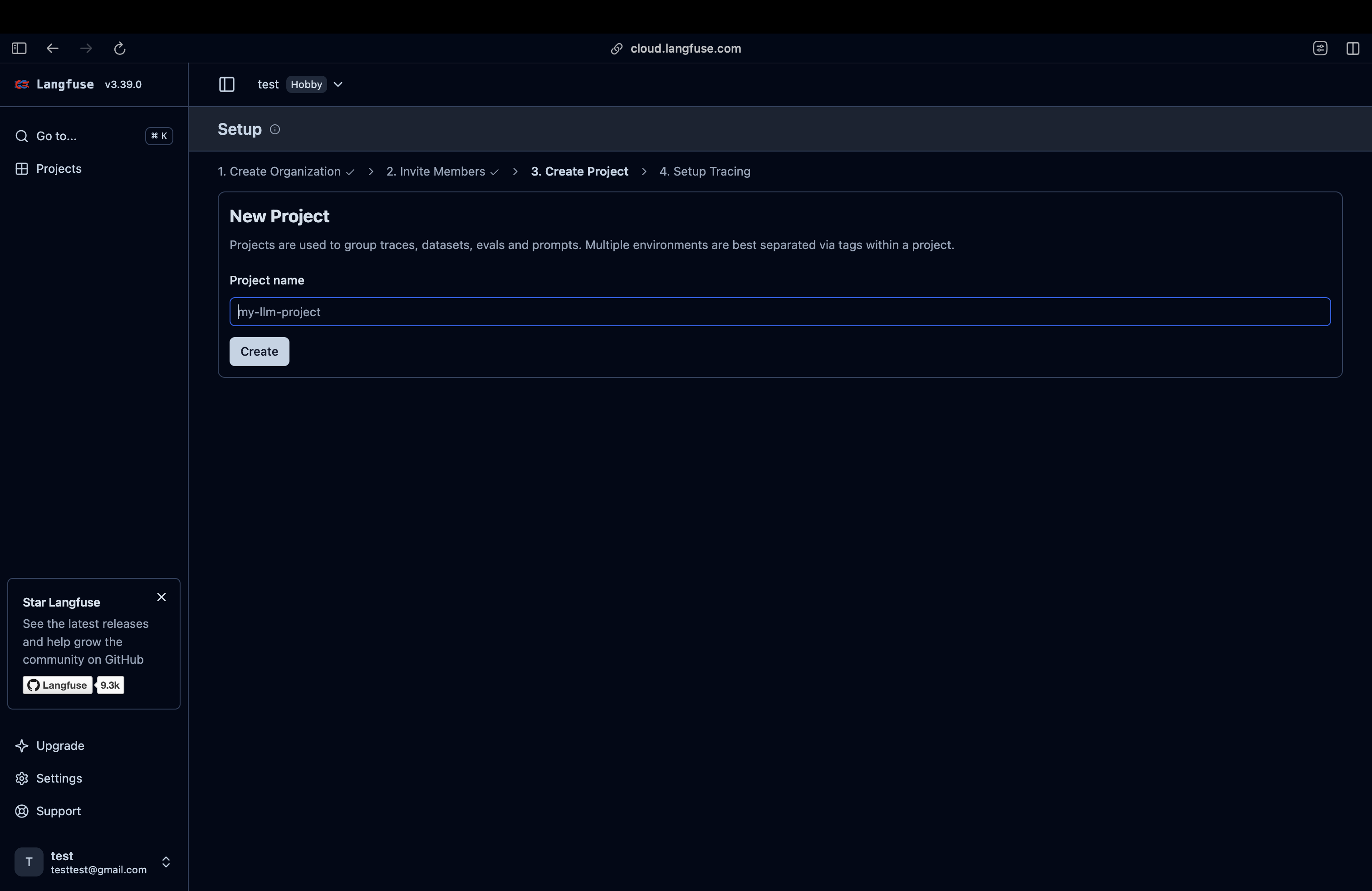

- Create a new organization and project.

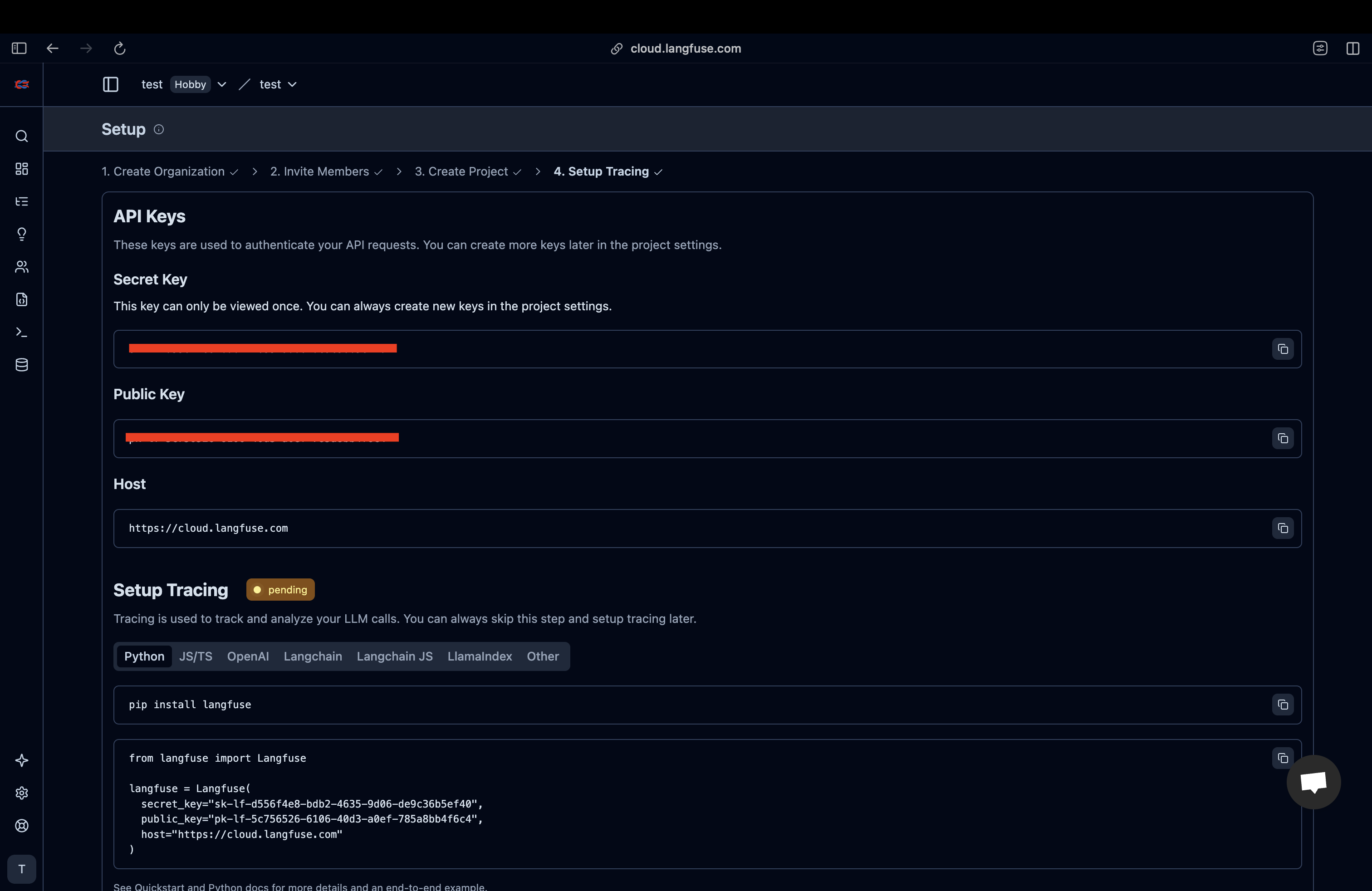

- Copy the secret key and public key from the project settings and fill them in the `~/.wrenai/.env` file. There should already be two keys inside: `LANGFUSE_PUBLIC_KEY=` and `LANGFUSE_SECRET_KEY=`.

- Relaunch Wren AI using the launcher.
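The values involved in the steps above can be sanity-checked before relaunching. The sketch below is illustrative only and not part of Wren AI: `host_for_region` and `missing_langfuse_keys` are hypothetical helpers, and the `.env` handling is a simplified assumption (plain `KEY=value` lines and `#` comments) rather than a full dotenv parser.

```python
# Hosts per Langfuse cloud data region, as stated in the note above.
LANGFUSE_HOSTS = {
    "EU": "https://cloud.langfuse.com",
    "US": "https://us.cloud.langfuse.com",
}

# The two keys this guide expects in ~/.wrenai/.env.
REQUIRED_KEYS = ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY")


def host_for_region(region: str) -> str:
    """Return the langfuse_host value for a Langfuse cloud data region."""
    try:
        return LANGFUSE_HOSTS[region.upper()]
    except KeyError:
        raise ValueError(f"unknown data region: {region!r}") from None


def missing_langfuse_keys(env_text: str) -> list[str]:
    """List the required keys that are absent or left empty in .env text."""
    values = {}
    for line in env_text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not KEY=value.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip("'\"")
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

Calling `missing_langfuse_keys` on the contents of `~/.wrenai/.env` returns an empty list once both keys are filled in, and `host_for_region("US")` gives the value to put in `langfuse_host` for a US data region.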

How to Use Langfuse

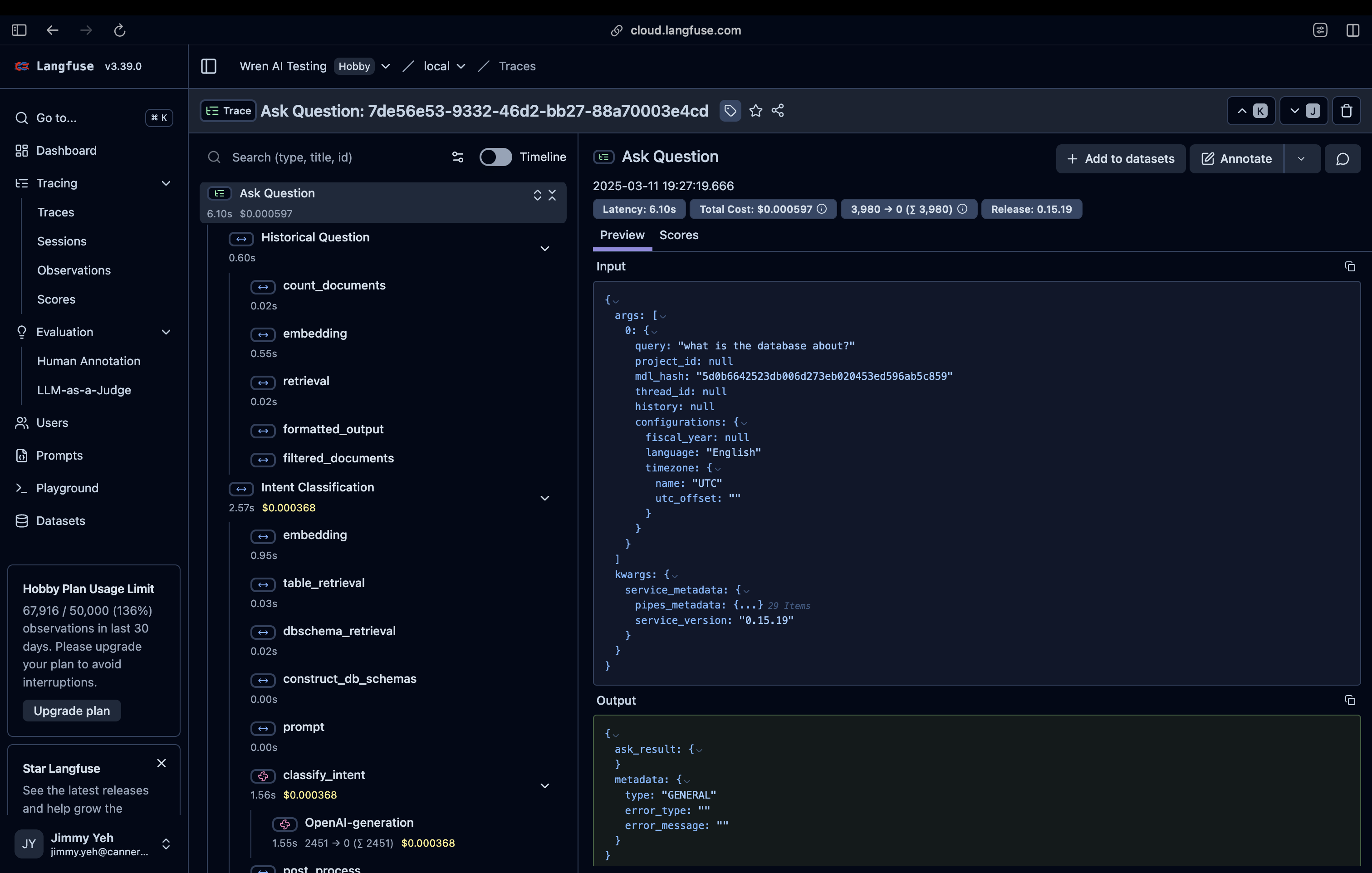

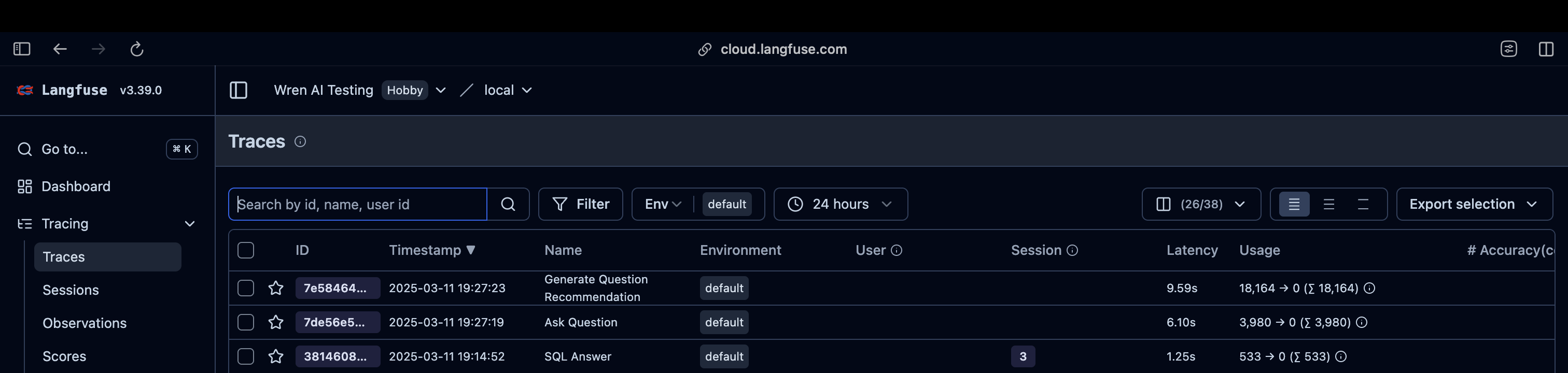

After you have set up Langfuse and restarted Wren AI, traces appear in the Traces tab in Langfuse within a few seconds of asking a question in Wren AI. The trace IDs are clickable, and you can open each one to see its details.

On the trace details page, you can see the prompt, the response, and the execution time of each step.